Data Flows

Data Flows is a visual tool within the Zeta Marketing Platform (ZMP) that can ingest and integrate any data source, either one time or as an ongoing feed. With Data Flows, you can manage data workflows and carry out master data management tasks such as cleansing, accuracy checks, duplicate resolution, and flexible extract, transform, and load (ETL) functions through a straightforward, low-code user interface. You can also use this feature to:

- Accelerate data integration and onboarding to drive timelier customer engagement
- Minimize or eliminate the need for technical intervention, so you can manage your own data on your own terms
- Harmonize your data so that your systems are connected and speaking the same language
- Simplify your data operations and gain complete transparency into your most valuable asset: your customer data

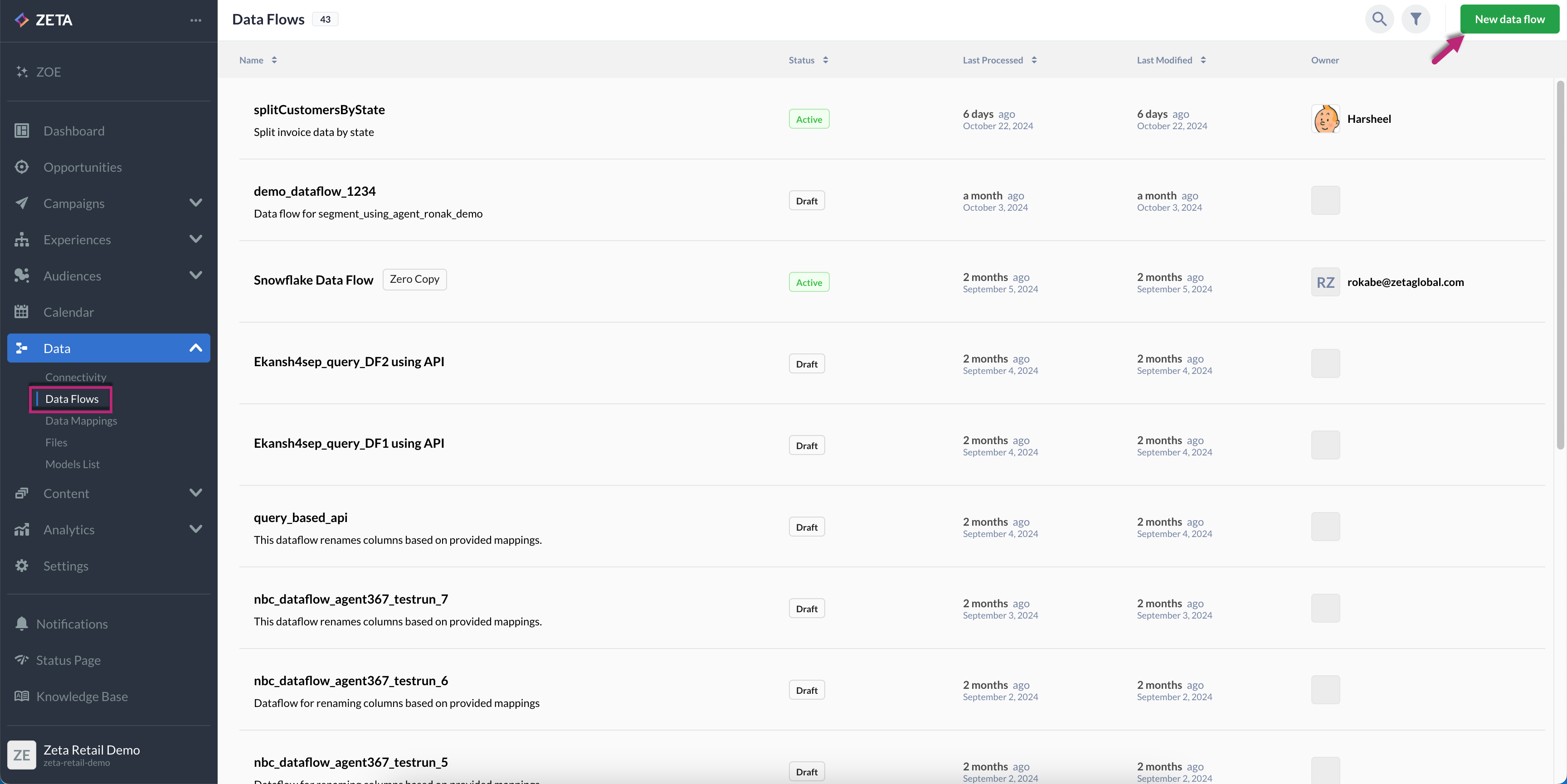

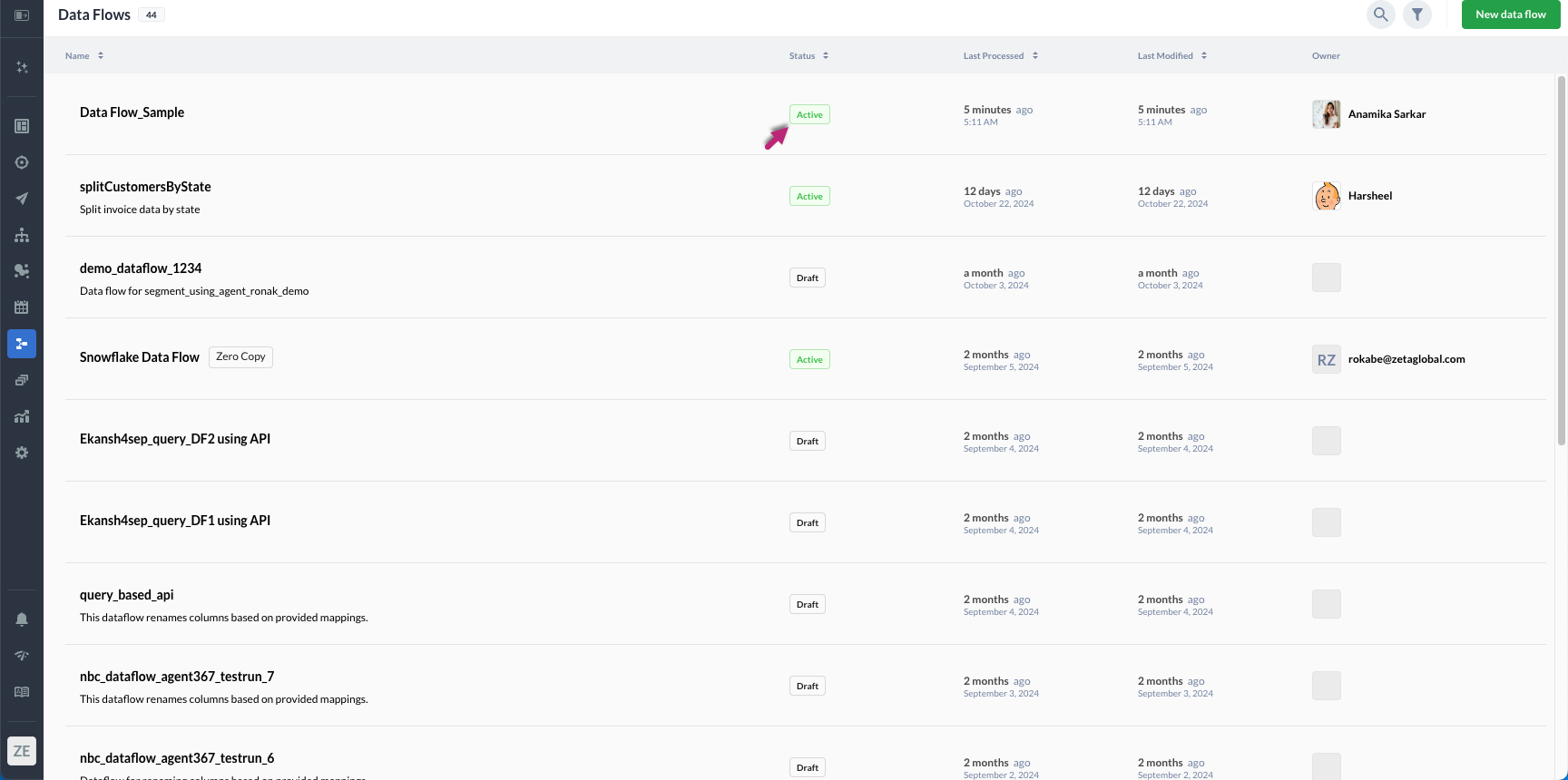

From the menu on the left, navigate to Data > Data Flows. Click on New Data Flow to open the canvas.

ZMP data flows currently comprise three components: Source, Destination, and Action.

| Source of Data (required) | Destination of Data (required) | Actions on the Data (optional) |
|---|---|---|
| This is where you pull the data from. | This is where you send the data to. | These are actions that can be taken on the data to ensure it has everything the destination needs. |
| Everyone has access to all sources. | Everyone has access to all destinations. | Some Data Actions are restricted to certain accounts if they were custom built for a client or if they require payment. |
| Types of Sources | Types of Destinations | Available Actions (changes often) |

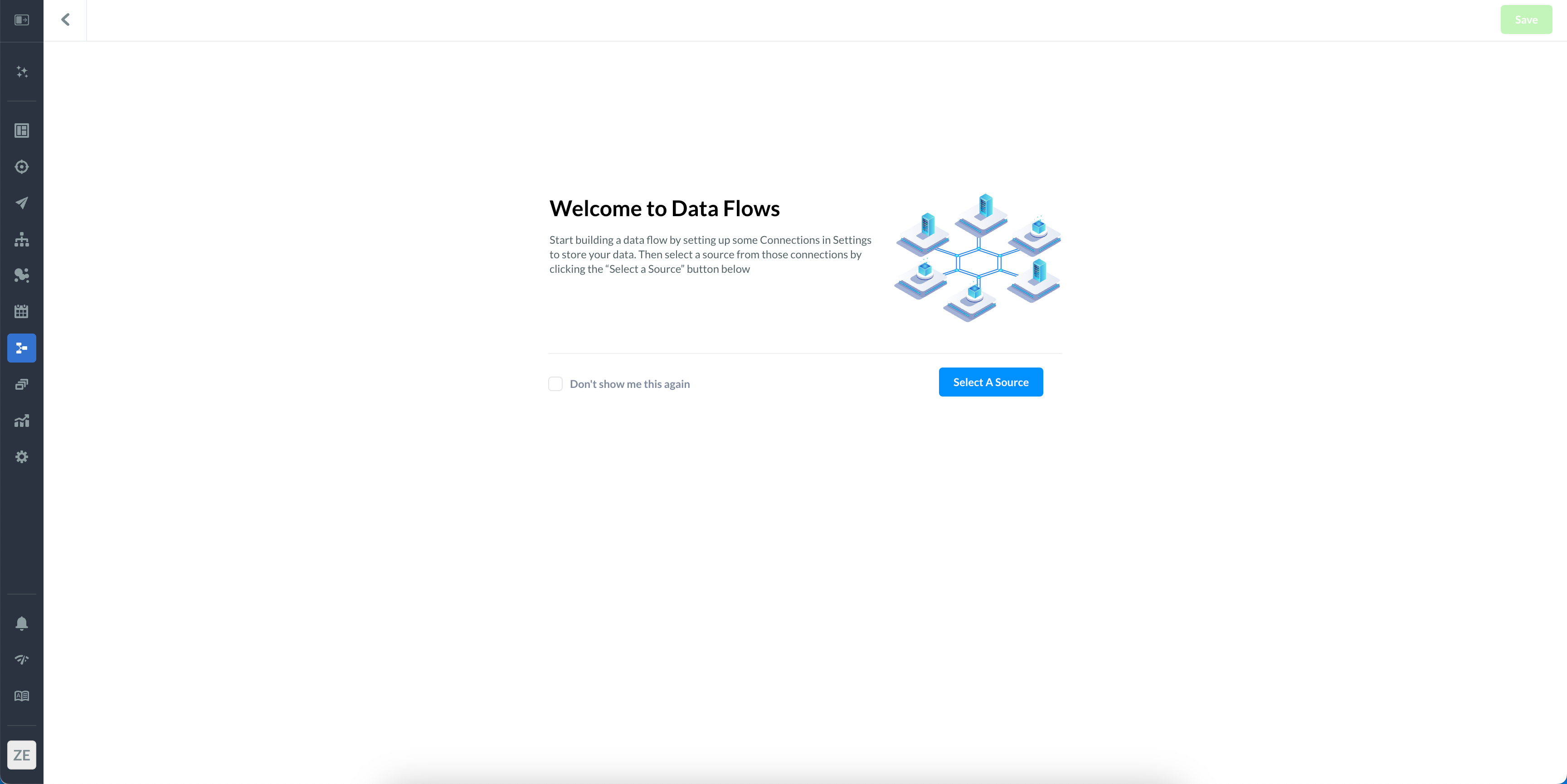

Selecting the Source

Click on Select Source, and choose your desired option from the list. Click on Next through each step to specify your source file and feed options.


Amazon S3 Buckets, Google Buckets, Microsoft Azure Blob Storage, and External SFTP can be configured for self-serve connection management.

You can also schedule the execution of Snowflake queries and Google BigQuery queries. The available schedule options are: every 5 minutes, every 15 minutes, hourly, daily, weekly, and monthly.


Any queries created for either Snowflake or BigQuery from within Data Flows can only be used in those flows. Query Segments created from the Segments and Lists page can be used in Data Flows as well.

Encrypted files can be decrypted in the Source card using a dropdown of private keys from Settings > Keys. Outgoing files can be encrypted in the Destination card using a dropdown of public keys from Settings > Keys.

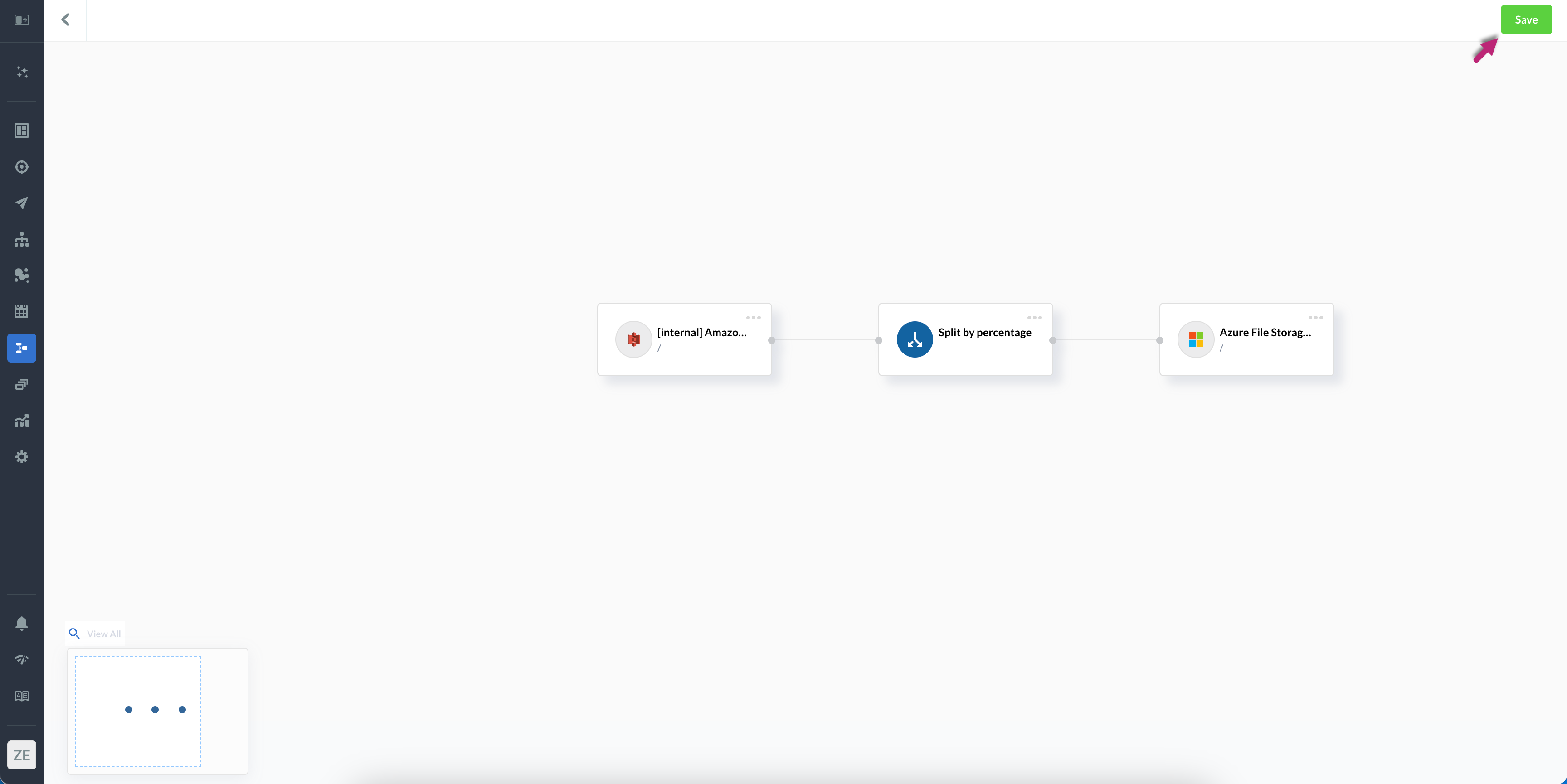

Deciding the Action

Once you’ve added the source to the canvas, click on the add button to add one or more actions that manipulate the records or fields in the data before it reaches the destination.


You can add multiple actions one after the other.


Choosing the Destination

After you’ve selected the data actions, click on the add button to select a destination from the list.


Visualize Output Data

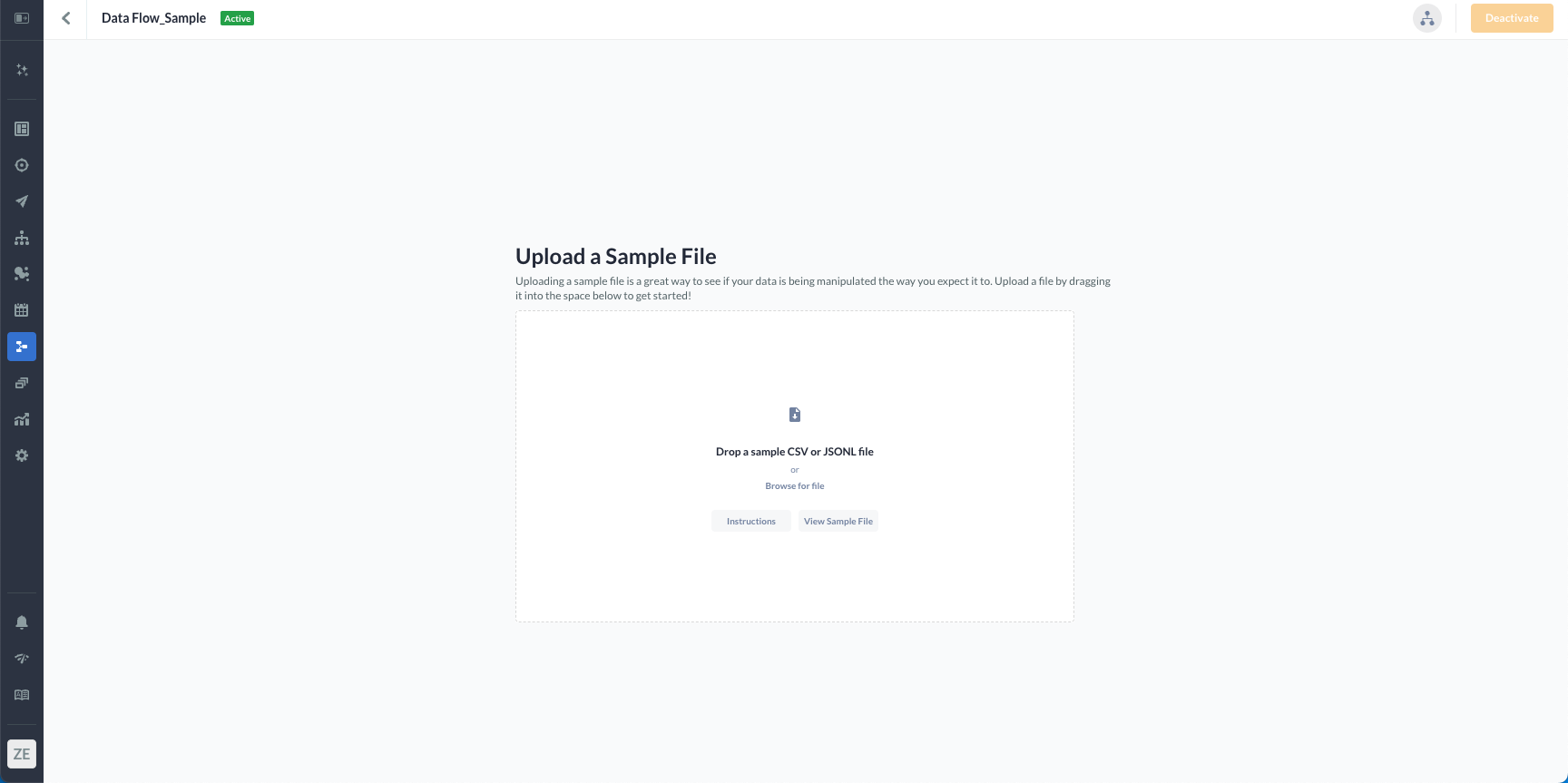

You can upload a sample file to preview the output file as it will look after passing through the data flow’s action nodes.

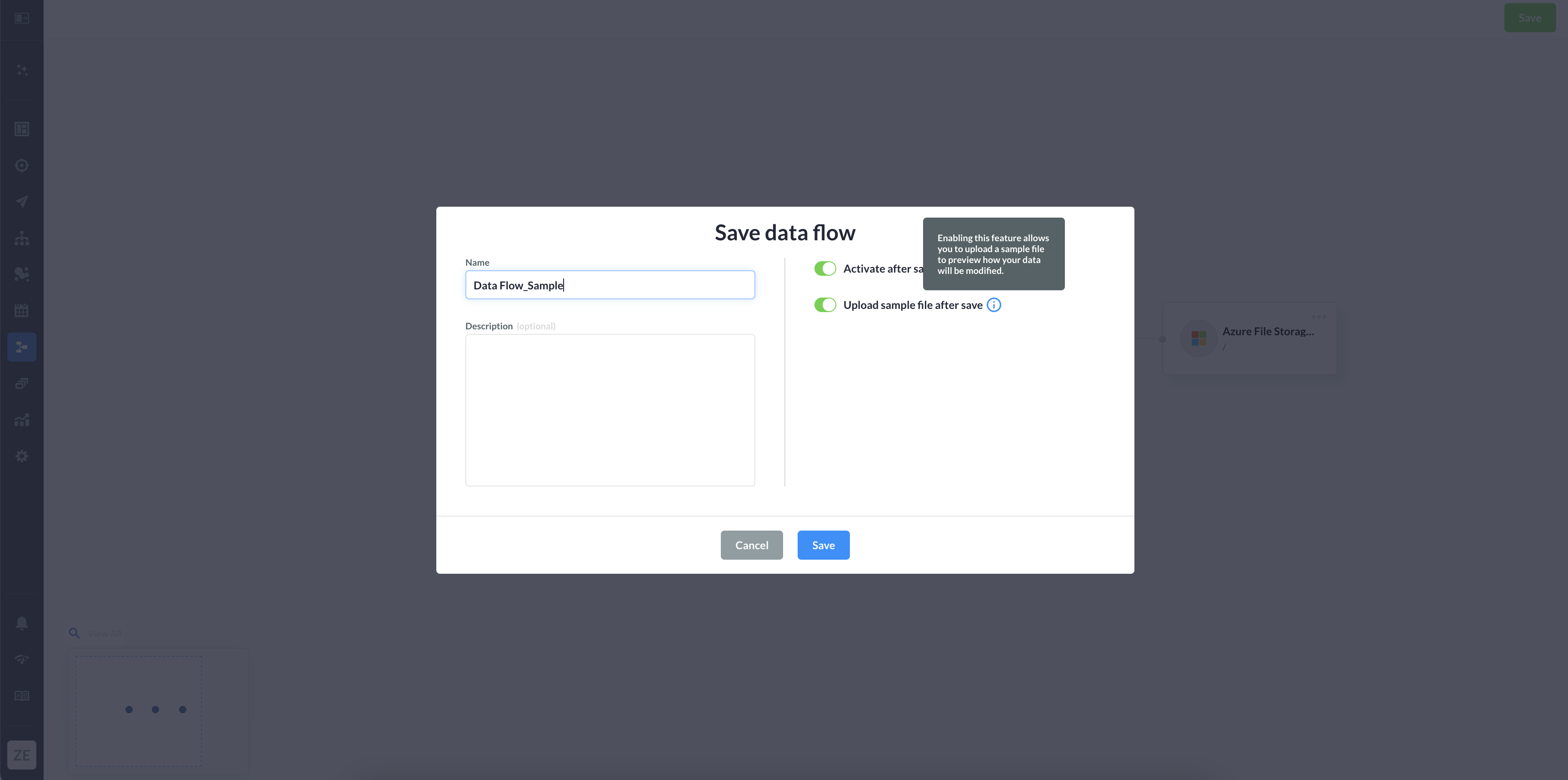

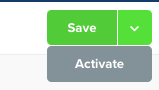

1. After finishing the entire flow within the data flow canvas, click on Save.

2. Select the Activate after save toggle, Upload sample file after save, or both.

The allowed file types for upload are CSV and JSONL.

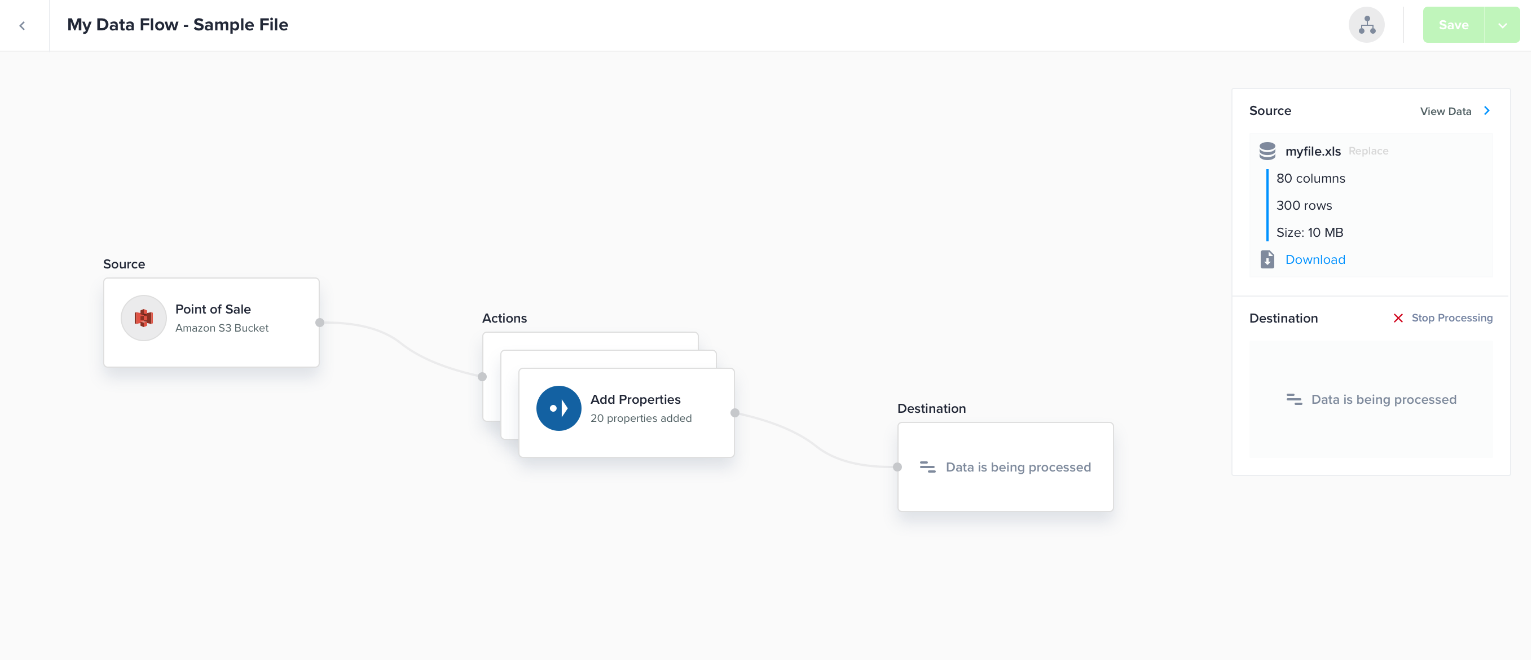

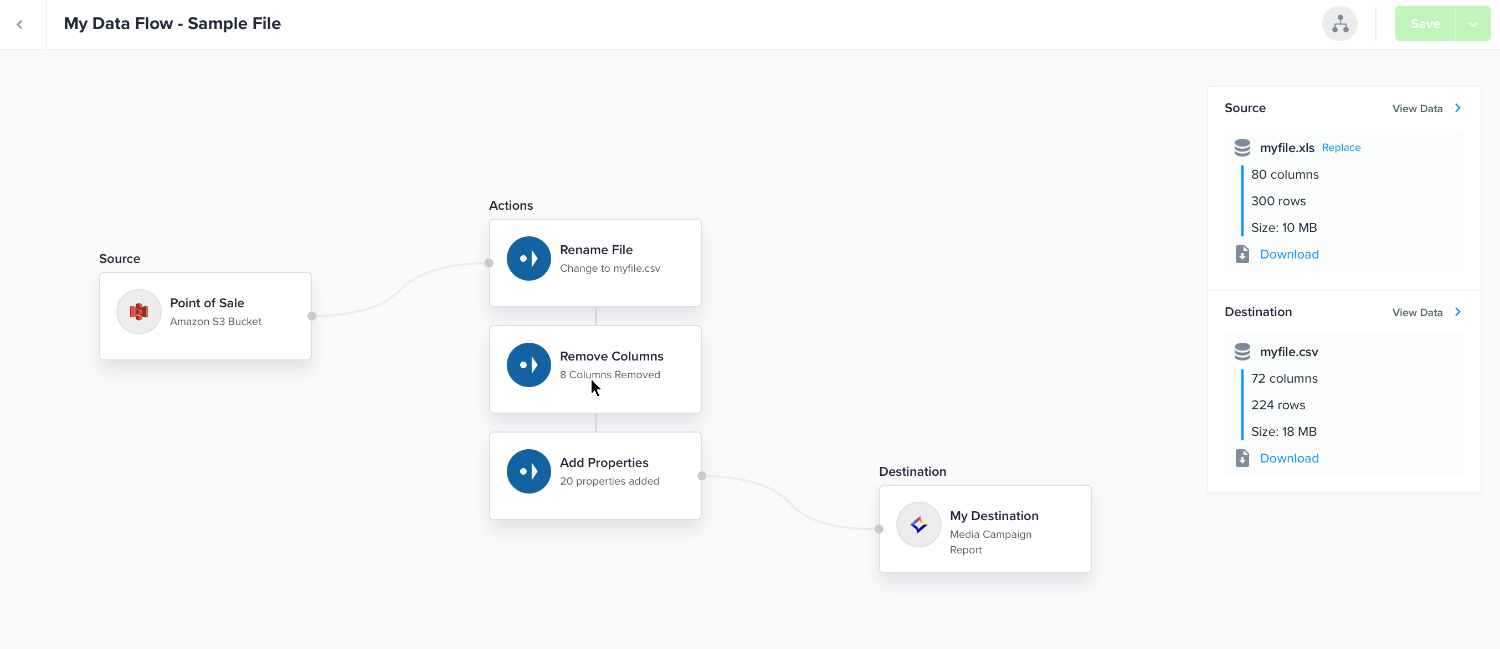

2. Based on the sample file uploaded:

the action cards will be stacked,

source summary will be displayed at the top-right, and

the destination card will show that the data is being processed.

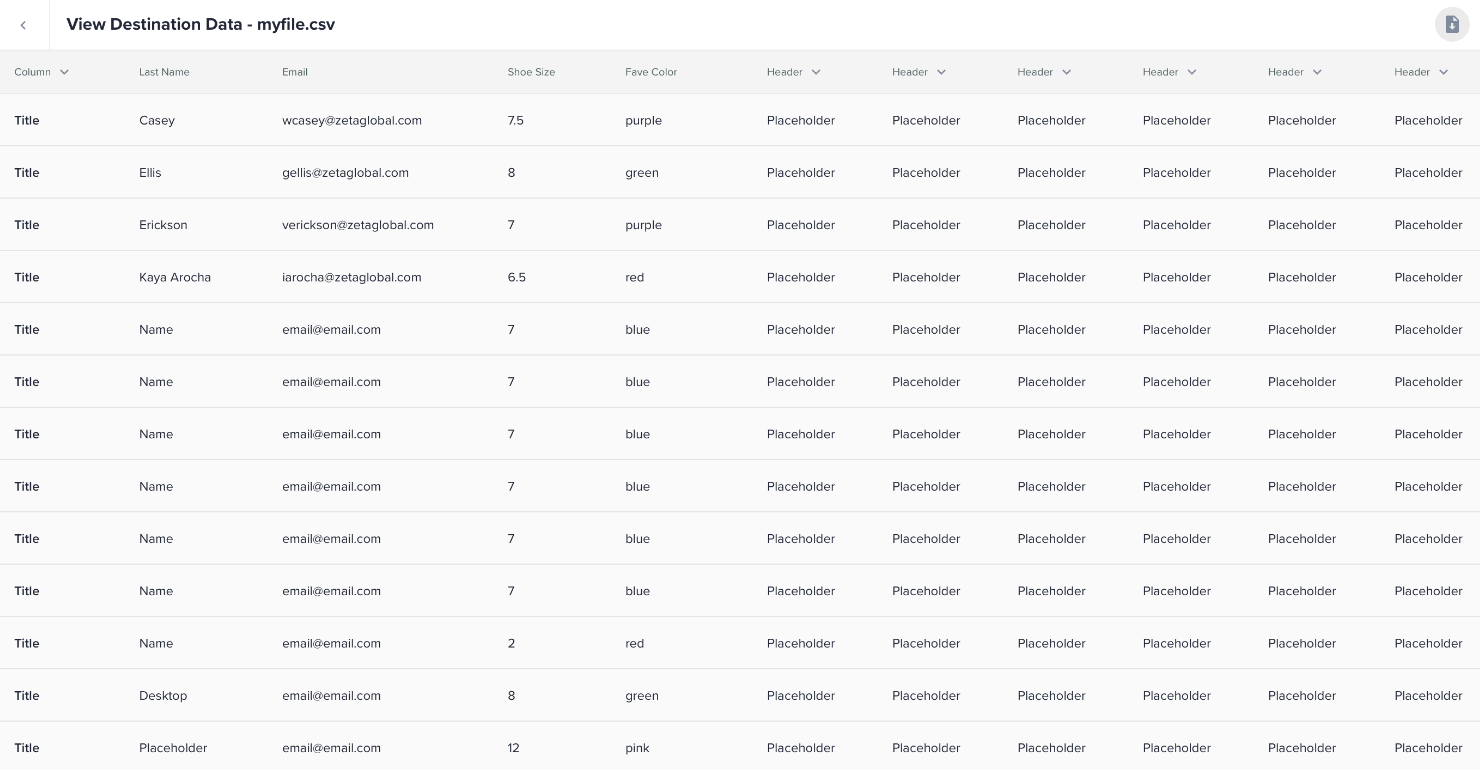

|

3. While the summary stats of uploaded source files and destination files are displayed, click on View Data to view the source data OR destination data in the table view mode.

The data flow canvas is NOT editable if you’ve chosen the Activate after save toggle since you will need to deactivate the flow before editing (existing behavior).

4. If you had chosen the Upload sample file after save, the data flow is editable and in a Draft state. Click on Save/Activate.

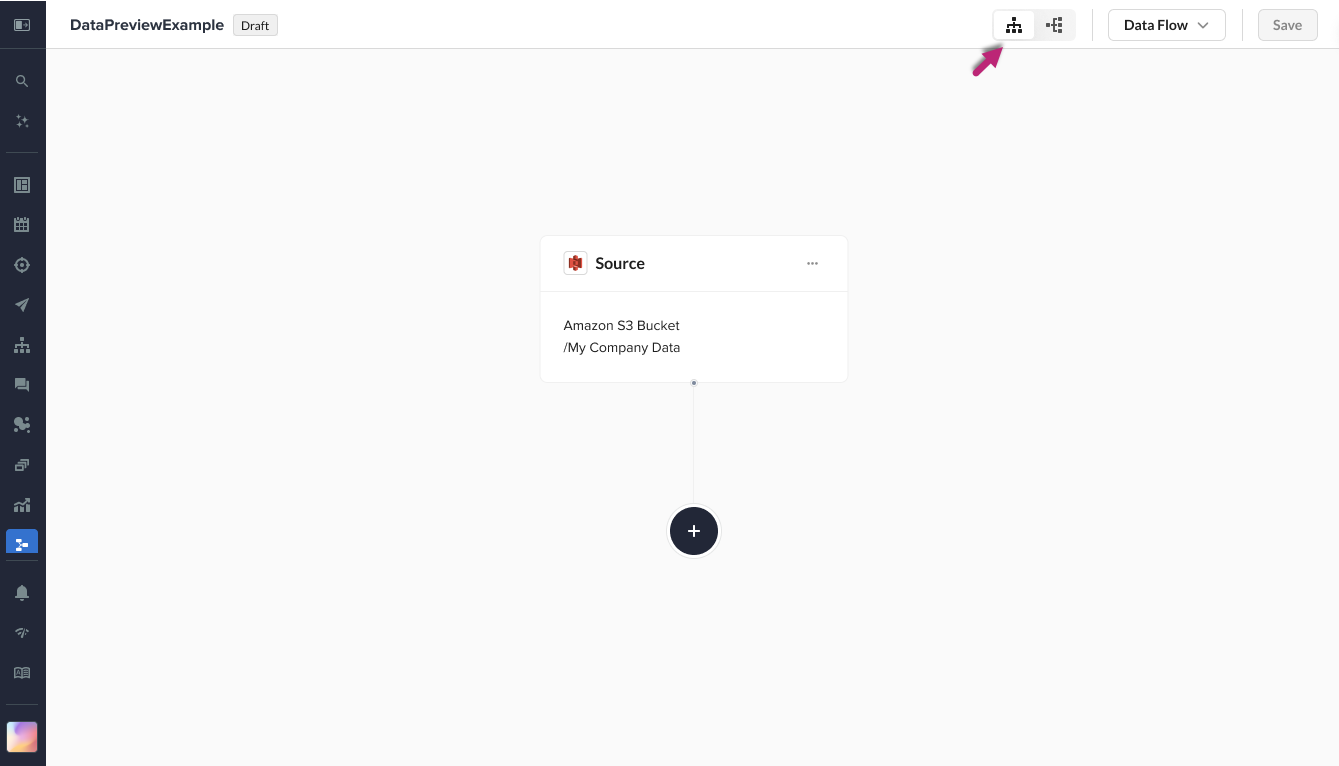

5. The data flow list view will show the status of your data flow.

Clicking on the flow, you can navigate back to the canvas.
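As a rough illustration of the two accepted sample file types mentioned in the steps above, the sketch below serializes the same records as CSV and as JSONL using only the Python standard library. The records, field names, and helper names (`to_csv`, `to_jsonl`) are made up for illustration and are not part of ZMP.

```python
import csv
import io
import json

# Hypothetical sample records; the field names are illustrative only.
SAMPLE_RECORDS = [
    {"email": "ana@example.com", "first_name": "Ana", "city": "Lisbon"},
    {"email": "raj@example.com", "first_name": "Raj", "city": "Pune"},
]


def to_csv(records):
    """Serialize records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(records[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per line."""
    return "".join(json.dumps(record) + "\n" for record in records)


if __name__ == "__main__":
    print(to_csv(SAMPLE_RECORDS))
    print(to_jsonl(SAMPLE_RECORDS))
```

Saving either output with a `.csv` or `.jsonl` extension gives a small file that could serve as a sample upload, assuming your real data has a similar flat, one-record-per-row shape.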

For Snowflake and Google BigQuery sources within a data flow, ZMP gives you control over the flow's execution behavior: you can decide whether the flow runs immediately upon activation or adheres strictly to the schedule defined in the source card. By default, the toggle for this feature is set to "true", so the flow runs immediately upon activation, which maintains backward compatibility. The option is presented when activating a flow for the first time (via the save modal on the data flow canvas or through the options menu next to the data flow name) and when reactivating a previously deactivated flow. This feature is not yet generally available; to enable it in your accounts, please contact Zeta Support.
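The effect of the activation toggle described above can be sketched as a small decision function. This is only a model of the documented behavior, not ZMP code: the function name, its parameters, and the idea that the next scheduled time is already known are all assumptions made for the example.

```python
from datetime import datetime


def first_run_time(activated_at, next_scheduled_at, run_on_activation=True):
    """Return when the first execution would start after activation.

    run_on_activation mirrors the toggle's documented default of "true":
    the flow runs as soon as it is activated. When it is False, the flow
    waits for the next time defined by the source card's schedule.
    """
    if run_on_activation:
        return activated_at
    return next_scheduled_at


if __name__ == "__main__":
    activated = datetime(2024, 11, 4, 10, 7)
    scheduled = datetime(2024, 11, 4, 11, 0)  # hypothetical next hourly slot
    print(first_run_time(activated, scheduled))
    print(first_run_time(activated, scheduled, run_on_activation=False))
```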

View Data Flows

You can switch the Data Flow canvas view from the default horizontal layout to vertical and back using the segmented control on the canvas itself.

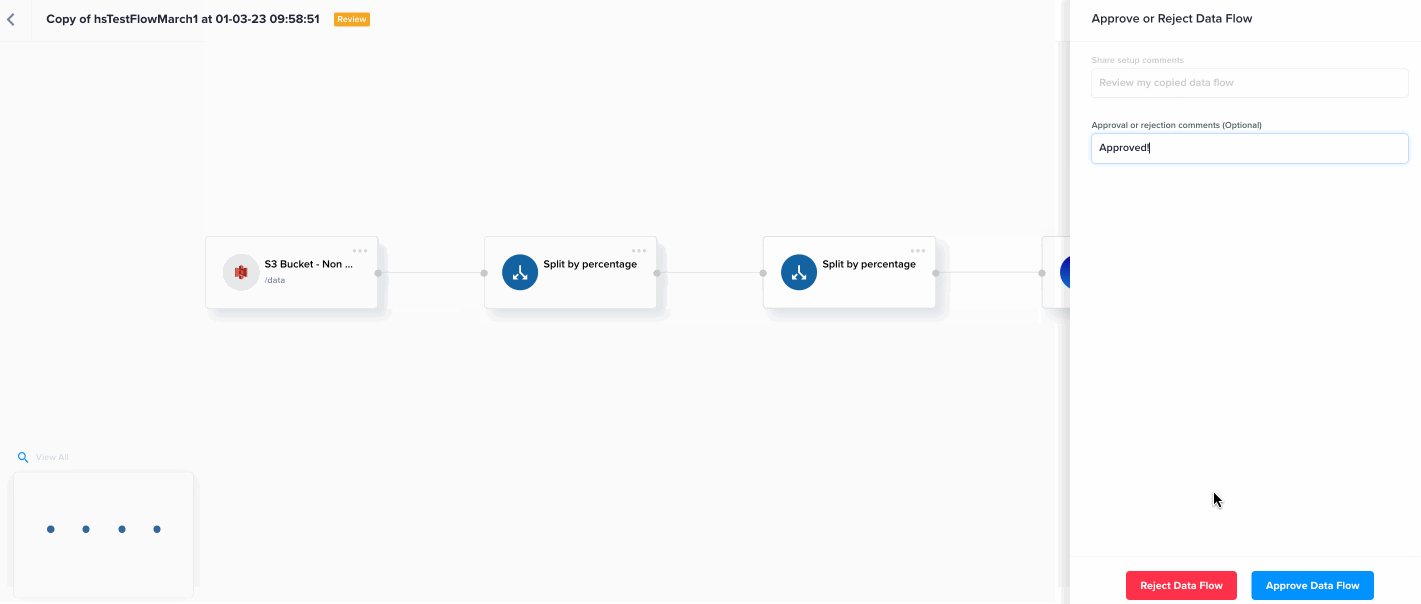

Share Data Flows

Reach out to your account team if you want this functionality enabled for your ZMP instance.

You can share data flows across accounts and copy feeds.

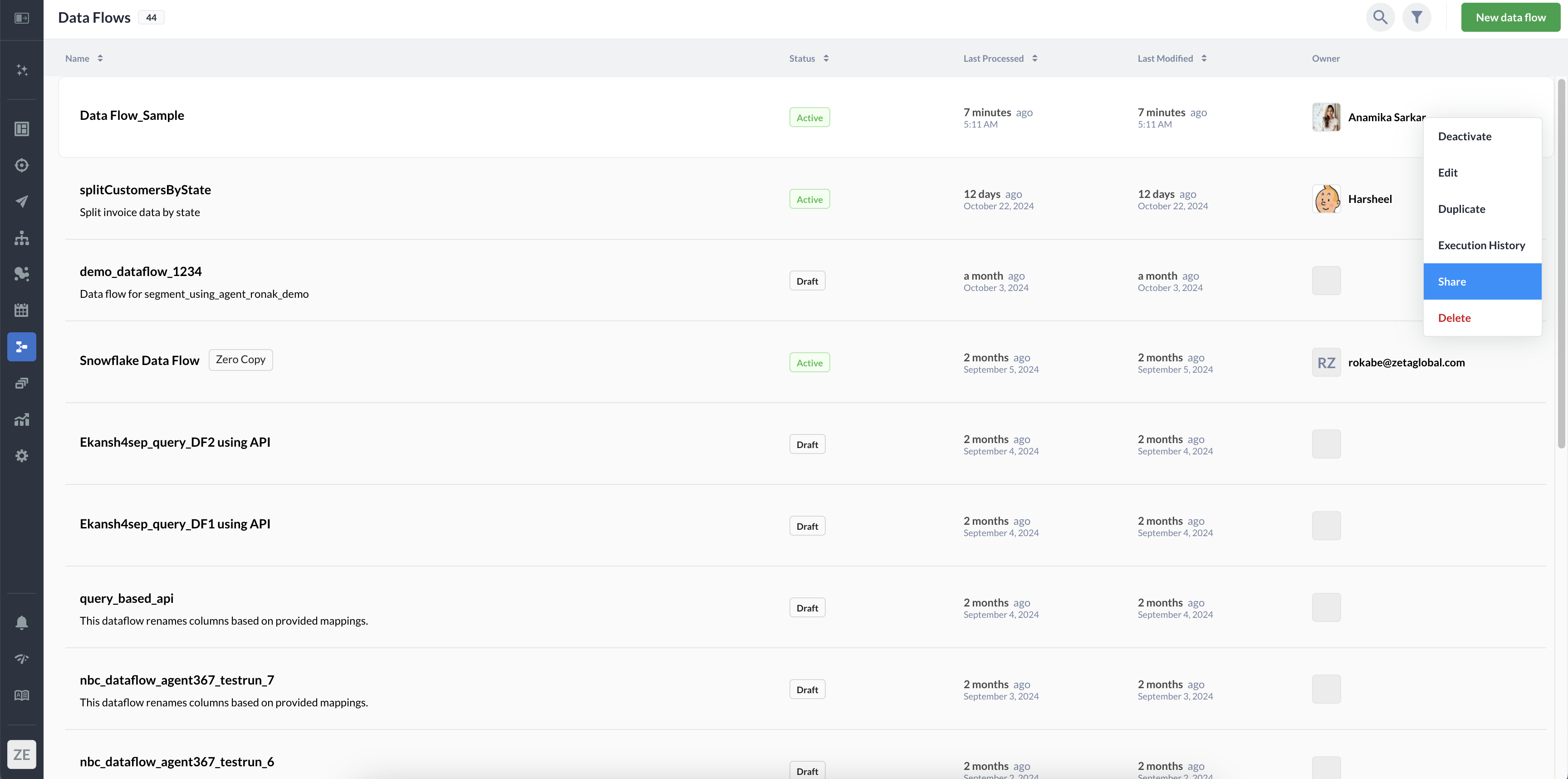

1. From the data flows list view, click on the action menu next to the data flow you wish to share and select Share.

2. In the Share Data Flow panel that slides in from the right, click on Share to Another Account.


Click on Add Account and select the destination account from the scrollable list that appears.

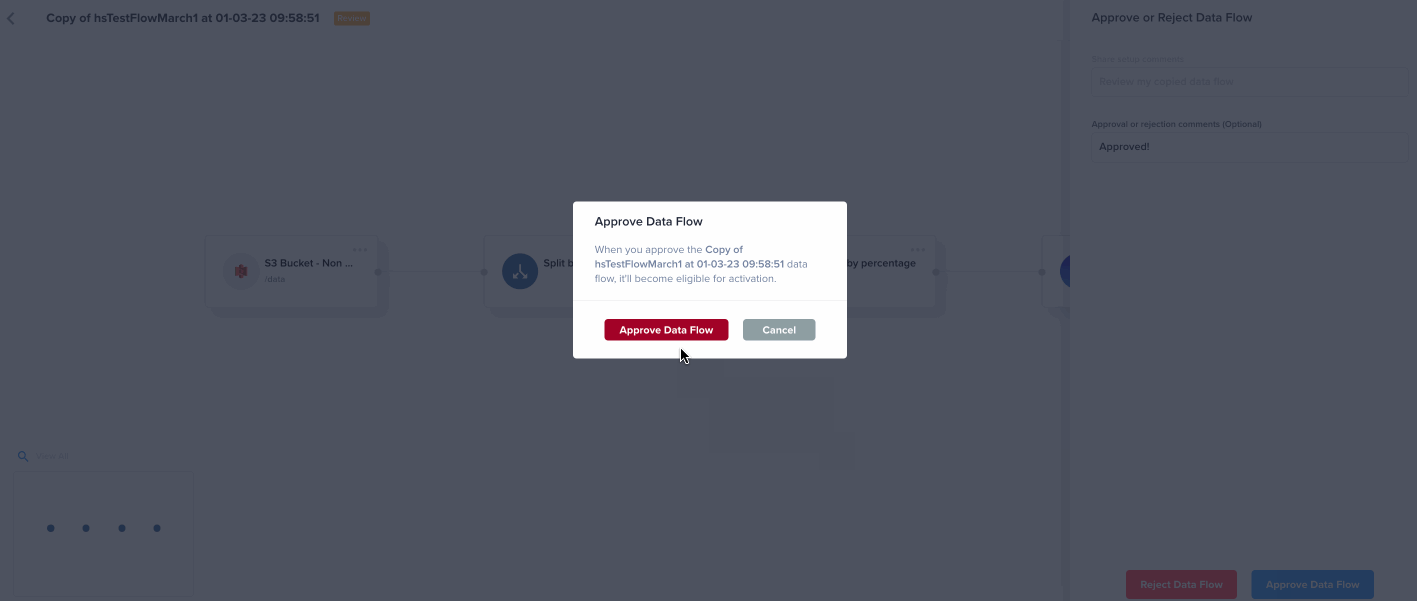

Once the destination account has been selected, navigate to the Approvals section and enter the Approver email address(es) to add people who can review the flow in the destination account.

Click on Share Data Flow. In the pop-up, click on Share to Account.

Once approved, the approver can Activate the data flow in the destination account. |

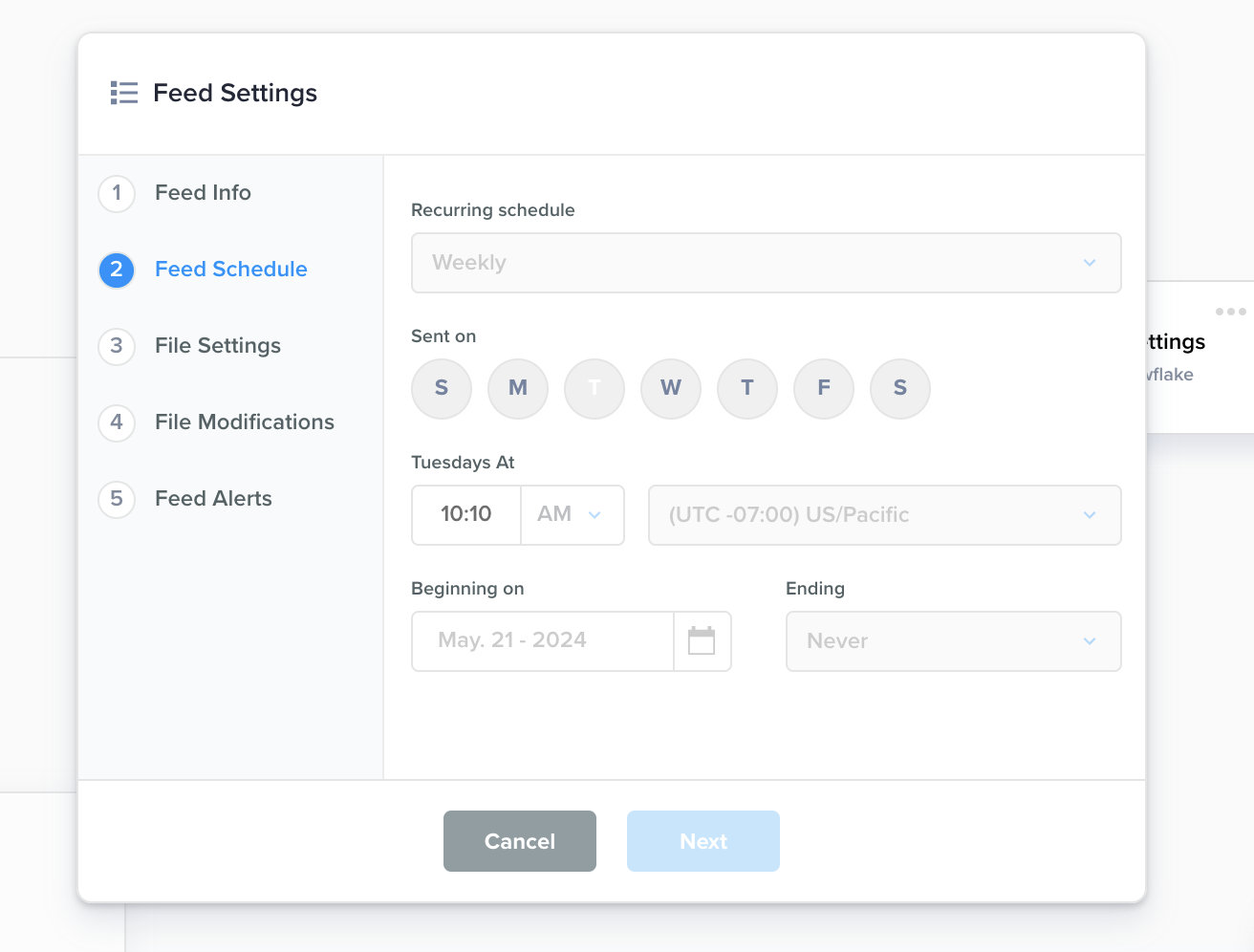

Data Flow Feed Schedule

If no start date is set in the data flow feed schedule, the data flow will pick up the data. Once a start date is set, the data flow no longer looks for any files uploaded earlier than that date. A file uploaded before the start date will therefore not be processed; upload it after the start date instead.
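The start date rule above amounts to a filter on upload time. The sketch below models it under two assumptions that the text does not spell out: that a flow without a start date considers every file, and that a file uploaded exactly at the start date is still picked up. The function name and the `(name, uploaded_at)` tuples are hypothetical and do not reflect a ZMP API.

```python
from datetime import datetime


def files_to_process(files, start_date=None):
    """Return the names of files a flow would consider.

    files is a list of (name, uploaded_at) tuples.
    With no start date, every file is considered (assumption).
    With a start date, files uploaded earlier than it are ignored.
    """
    if start_date is None:
        return [name for name, _ in files]
    return [name for name, uploaded_at in files if uploaded_at >= start_date]


if __name__ == "__main__":
    uploads = [
        ("october.csv", datetime(2024, 10, 1)),
        ("november.csv", datetime(2024, 11, 5)),
    ]
    print(files_to_process(uploads))
    print(files_to_process(uploads, start_date=datetime(2024, 11, 1)))
```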

Data Flow State Change Notifications

Email notifications are sent out under the following scenarios:

- Data Flow is Activated
- Data Flow is Deactivated
- Data Flow is Shared from one account to another
- Data Flow is requested to be approved
- Data Flow is Approved
- Data Flow is Rejected

In all these cases, the emails go to users on the ZMP notification list as well as to users whose email addresses were added in the Notifications or Approval steps when a data flow was shared.

Users who do not want to receive these emails can remove themselves from the list and add themselves back later if their needs change.

Execution History

Execution History provides a running, real-time list of all executions, both those in progress and those that have already finished.

From the data flows list view, click on the action menu next to the data flow and click on Execution History.

The flow status can be Running, Completed with Success, or Completed with Failure.

A list of metrics, such as input file name and row count, is also displayed.

A badge also appears when a data flow fails. The badge remains until the next 10 runs execute successfully, at which point it clears. The badge is clickable, and clicking it takes you directly to the newest error.


You can also use the search icon on the right as well as the date filter.
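The failure badge rule described above can be modeled as a counter of successful runs since the most recent failure. This is a sketch of one reading of that rule, not ZMP's implementation: it assumes the 10 successful runs must be consecutive, so a new failure restarts the count. The function name and the boolean run list are hypothetical.

```python
def badge_visible(run_results, clear_after=10):
    """Decide whether the failure badge would be shown.

    run_results lists runs in chronological order, True for success and
    False for failure. The badge is shown from a failure until
    clear_after successful runs have followed it (assumed consecutive).
    """
    successes_since_failure = None  # None means no failure seen yet
    for succeeded in run_results:
        if not succeeded:
            successes_since_failure = 0
        elif successes_since_failure is not None:
            successes_since_failure += 1
    return (
        successes_since_failure is not None
        and successes_since_failure < clear_after
    )


if __name__ == "__main__":
    print(badge_visible([True, False] + [True] * 9))   # 9 successes after a failure
    print(badge_visible([True, False] + [True] * 10))  # 10 successes after a failure
```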