Zeta + Google BigQuery: Clean Room Data Onboarding Guide

Zeta supports integration with Google BigQuery for seamless data access and segmentation within the Zeta Marketing Platform (ZMP). This guide will help you set up a secure connection using service account credentials and key pair authentication.

Prerequisites:

Before you begin, ensure that External databases are enabled for your ZMP account.

This requires submitting a support ticket to Zeta to enable the ext_db_segmentation_enabled flag on your account.

Setting Up the BigQuery Connection

Once external databases are enabled:

1. Navigate to Settings > Integrations > Connections.

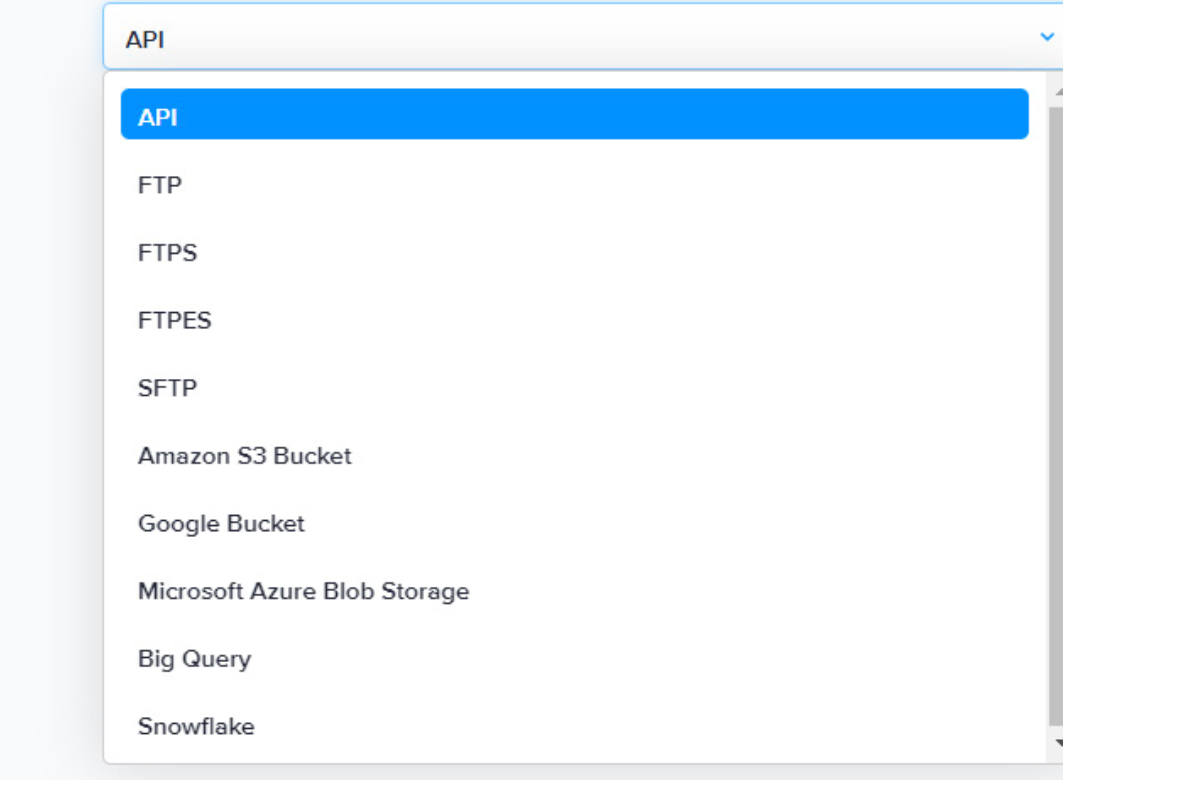

2. Select BigQuery from the list of available connection types.

Zeta uses Key Pair Authentication to connect to your BigQuery instance.

3. Create a Service Account in Google Cloud Console, then create a JSON-type key for it.

Once the key is created, download the JSON key file; you will need it for ZMP configuration.

4. Zeta provides a BigQuery test instance for onboarding and sandbox testing: the Zeta Demos project on Google Cloud Console. An invite is required; reach out to David Harry.

Service Account Key Configuration (Sample)

When adding the connection in ZMP, you'll be asked to provide details from your service account JSON. The required format looks like this (example only):

    {
      "type": "service_account",
      "project_id": "zeta-demos",
      "private_key_id": "xxxxxxx",
      "private_key": "-----BEGIN PRIVATE KEY-----\n...",
      "client_email": "bq-services@zeta-demos.iam.gserviceaccount.com",
      "client_id": "xxxxxxx",
      "auth_uri": "https://accounts.google.com/o/oauth2/auth",
      "token_uri": "https://oauth2.googleapis.com/token",
      ...
    }

There is a parameter called tmp_dataset. If unsure what to enter, use: zeta-demos
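Before pasting the service account JSON into ZMP, it can help to sanity-check the downloaded key file locally. The sketch below is illustrative only: the helper names are hypothetical, and treating every field shown in the sample above as required is an assumption, not a documented ZMP rule.

```python
import json

# Fields that appear in the sample key above. Treating all of them as
# required is an assumption made for this sketch.
REQUIRED_FIELDS = (
    "type", "project_id", "private_key_id", "private_key",
    "client_email", "client_id", "auth_uri", "token_uri",
)


def check_service_account_key(key: dict) -> list[str]:
    """Return a list of problems found in a parsed service account key."""
    # Flag fields that are absent or empty.
    problems = [
        f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)
    ]
    # A service account key file declares its type explicitly.
    if key.get("type") not in (None, "", "service_account"):
        problems.append(f"unexpected type: {key['type']!r}")
    # The private key is a PEM block, as in the sample.
    private_key = key.get("private_key", "")
    if private_key and not private_key.startswith("-----BEGIN PRIVATE KEY-----"):
        problems.append("private_key does not look like a PEM block")
    return problems


def check_service_account_file(path: str) -> list[str]:
    """Load a key file from disk (hypothetical path) and check it."""
    with open(path, encoding="utf-8") as handle:
        return check_service_account_key(json.load(handle))
```

An empty list means the file has the same shape as the sample; any returned message points at a field to fix before adding the connection.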

Snowflake Integration (Client-Side Encrypted Data)

You can also integrate Snowflake for secure, encrypted data analysis via named stages. As a first step, create a Named Stage with Encryption.

Use a CREATE STAGE SQL command with a MASTER_KEY parameter:

    CREATE STAGE encrypted_customer_stage
      url='s3://customer-bucket/data/'
      credentials=(AWS_KEY_ID='ABCDEF' AWS_SECRET_KEY='12345678')
      encryption=(MASTER_KEY='eSxX...');

The MASTER_KEY must be a Base64-encoded AES key (128-bit or 256-bit).
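One way to produce a MASTER_KEY value in the required form is sketched below, using only the Python standard library. The function names are hypothetical, and how you store and distribute the key afterwards is outside the scope of this sketch.

```python
import base64
import binascii
import secrets


def generate_master_key(bits: int = 256) -> str:
    """Return a random AES key of the given size, Base64-encoded."""
    if bits not in (128, 256):
        raise ValueError("key size must be 128 or 256 bits")
    # secrets draws from the operating system's cryptographic RNG.
    return base64.b64encode(secrets.token_bytes(bits // 8)).decode("ascii")


def is_valid_master_key(value: str) -> bool:
    """Check that a string is Base64 and decodes to 16 or 32 bytes."""
    try:
        raw = base64.b64decode(value, validate=True)
    except (binascii.Error, ValueError):
        return False
    return len(raw) in (16, 32)


if __name__ == "__main__":
    # Prints a fresh 256-bit key suitable for pasting into MASTER_KEY='...'.
    print(generate_master_key())
```

Running the check on an existing key before creating the stage catches truncated or mis-copied values early.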

Load Encrypted Data into Snowflake

Create a table and copy the data from your encrypted stage:

    CREATE TABLE users (
      id bigint,
      name varchar(500),
      purchases int
    );

    COPY INTO users
      FROM @encrypted_customer_stage/users;

To unload data into the stage:

    CREATE TABLE most_purchases AS
      SELECT * FROM users ORDER BY purchases DESC LIMIT 10;

    COPY INTO @encrypted_customer_stage/most_purchases
      FROM most_purchases;

Data files are encrypted with your MASTER_KEY and can be downloaded using any compatible client/tool.