A/B/n Testing

Testing helps you find and remove errors, and it also lets you compare actual results against expected results to improve quality. The Zeta Marketing Platform lets marketers test subject lines and/or content, either automatically or manually, with up to ten variants across Broadcast and Triggered campaigns.

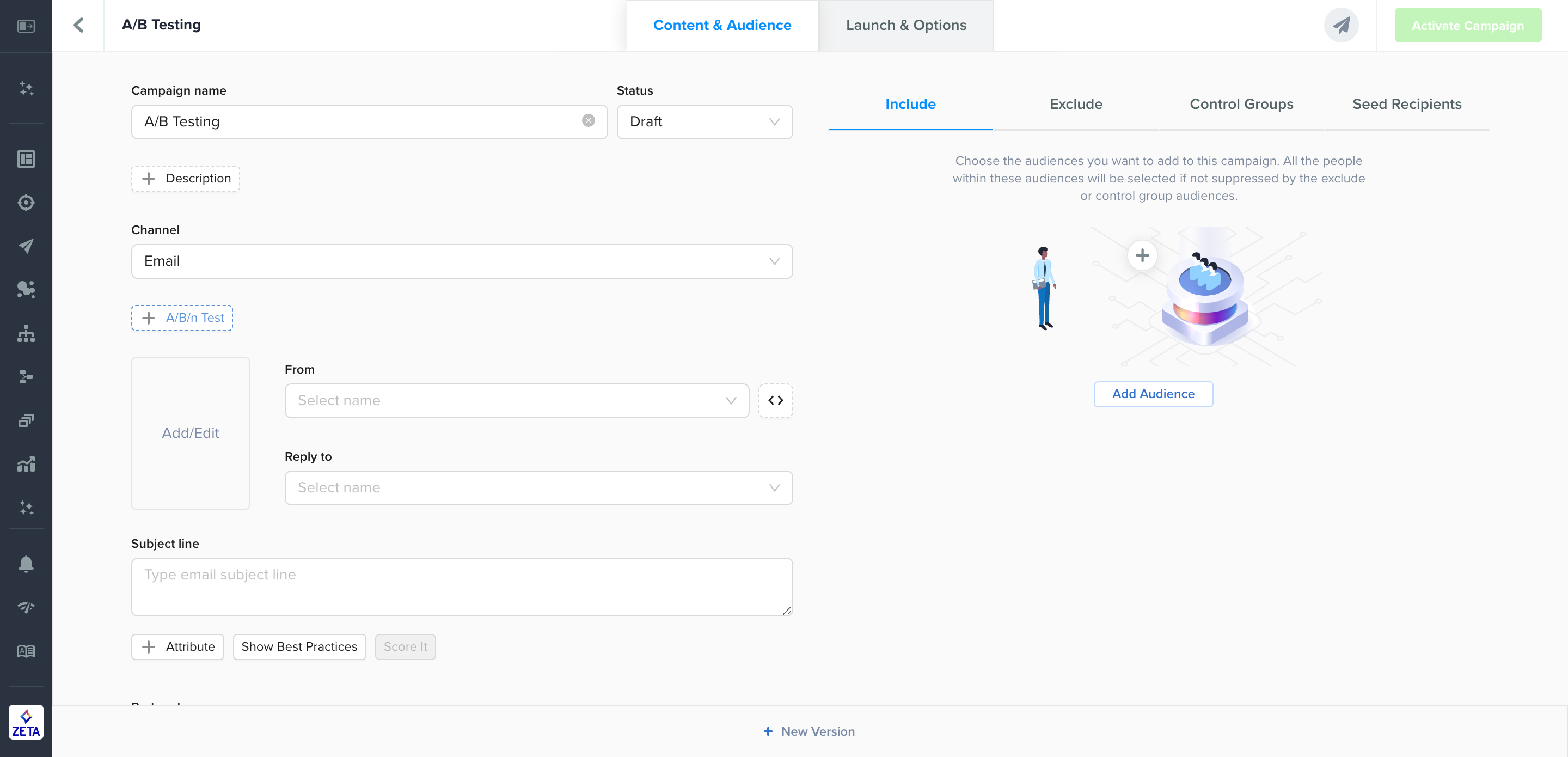

Enabling A/B/n Testing

To enable A/B/n testing for your Broadcast campaign, click the + Add an A/B test option under the Content & Audience tab. For Triggered campaigns, this option is located on the main page.

While A/B/n testing is enabled, it will run for every instance of the campaign, including any recurring type of campaign. This applies to both manual and automated tests.

The A/B testing option is not available when Prime Time is enabled.

Managing Variants

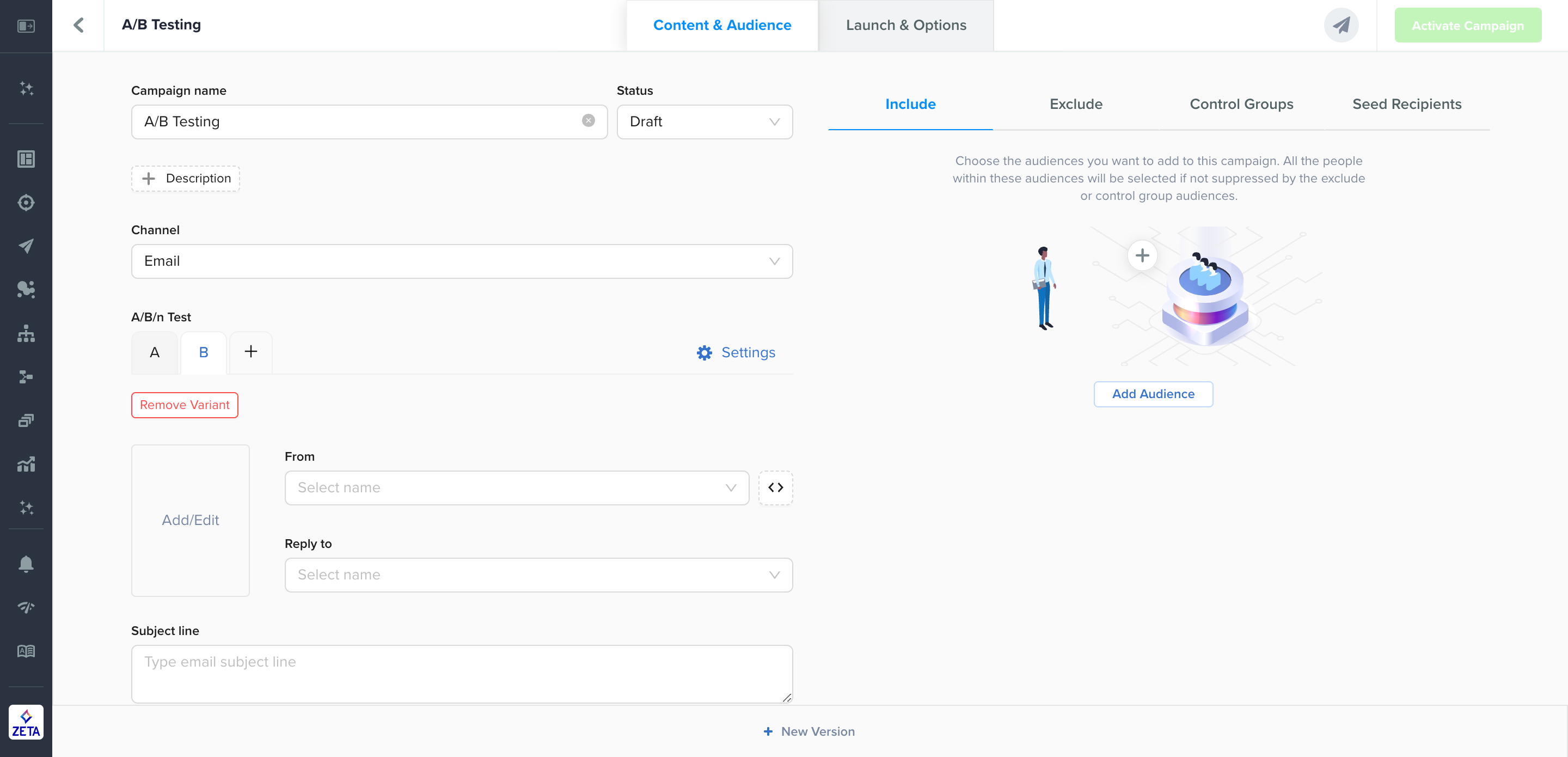

Adding a Variant

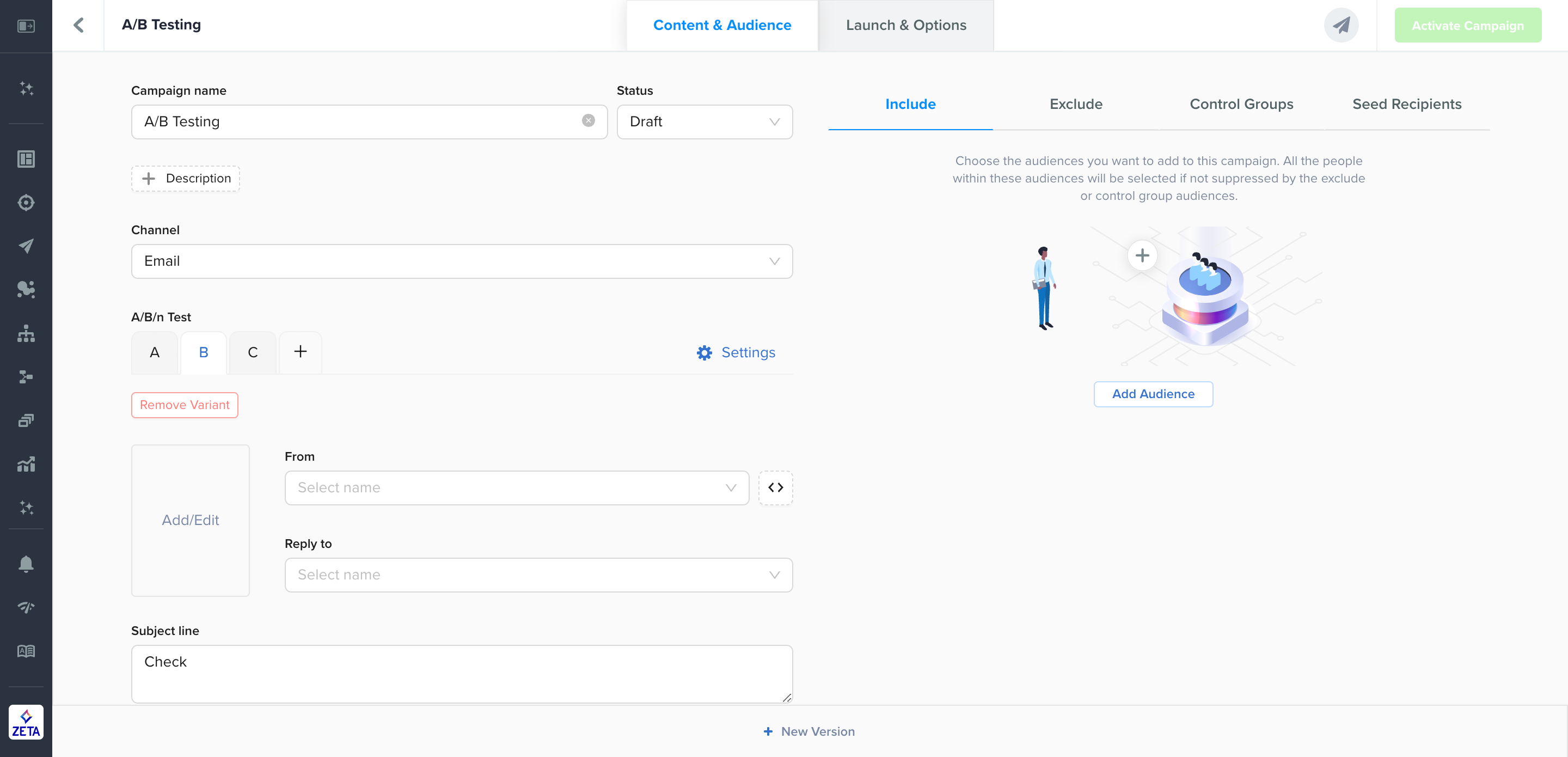

To add a variant beyond the default A and B variants, click the + icon to the right of A and B. You can add up to 10 variants (A-J). Each new variant is duplicated from the most recent variant (e.g., C is a duplicate of B). To edit a variant, select its letter.

Removing a Variant

To remove a variant, select the letter of the variant you want to remove and click Remove Variant. If you remove a variant from the middle of the sequence (e.g., B from A, B, C, and D), all later variant letters shift to the left (e.g., removing B makes C become B and D become C). Removing a variant cannot be undone.
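The add and remove behavior described above can be pictured with a small sketch. This is only an illustration of the lettering rules, not platform code: the helper names `relabel`, `add_variant`, and `remove_variant` are hypothetical, and variants are modeled as a plain mapping from letter to content.

```python
import string

MAX_VARIANTS = 10  # the article states a limit of ten variants (A-J)


def relabel(contents):
    """Assign letters A, B, C, ... to contents based on their position."""
    return dict(zip(string.ascii_uppercase, contents))


def add_variant(variants):
    """Return a new mapping with one more variant, copied from the latest one."""
    if len(variants) >= MAX_VARIANTS:
        raise ValueError("cannot add more than 10 variants")
    contents = list(variants.values())
    contents.append(contents[-1])  # the new variant duplicates the most recent one
    return relabel(contents)


def remove_variant(variants, letter):
    """Return a new mapping without `letter`; later letters shift to the left."""
    if letter not in variants:
        raise KeyError(letter)
    remaining = [content for key, content in variants.items() if key != letter]
    return relabel(remaining)


variants = relabel(["Subject 1", "Subject 2", "Subject 3", "Subject 4"])
after_removal = remove_variant(variants, "B")  # old C is now B, old D is now C
after_add = add_variant(after_removal)         # new D duplicates the current C
```

In this sketch the letter is derived purely from position, which is why removing a middle variant renames every variant after it.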

Removing an A/B/n Test

To disable A/B/n testing, remove variants until only one remains. Once a single variant is left, A/B/n testing is disabled.

Supported Channels

For Broadcast campaigns, Manual Tests may be executed on any type of channel while Automated Tests may only be executed on native channels:

Email - By default, optimization may be based on Clicks or Opens. If the account has been set up to track conversions and revenue, then these two may also be used as the basis for A/B/n testing.

SMS/MMS - Optimization may be based on Clicks only.

Push Notifications - Optimization may be based on Clicks or Opens.

Triggered campaigns only support A/B/n testing for email and SMS/MMS.

Configuring A/B/n Testing

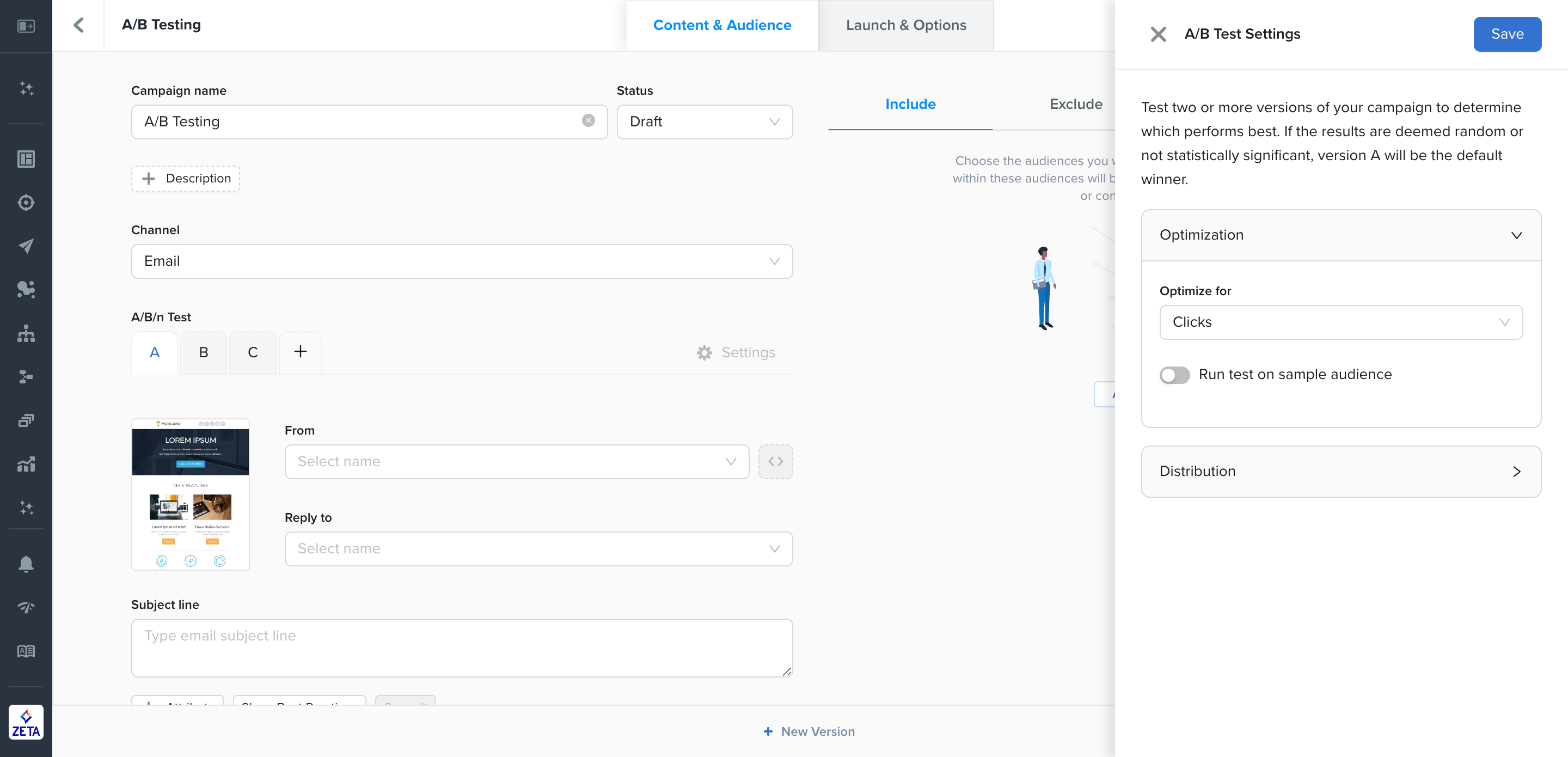

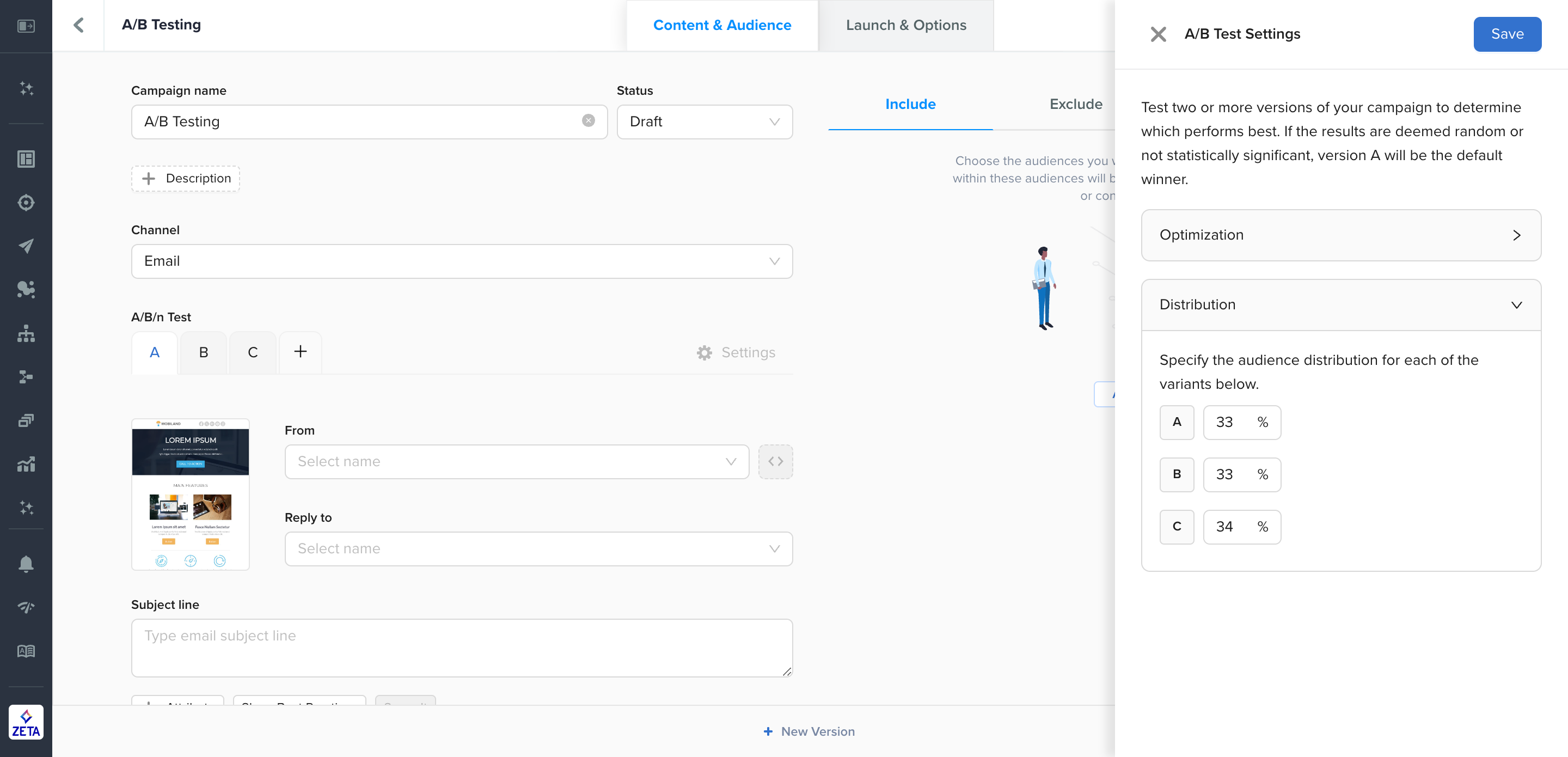

Click on Settings to the right of the A/B/n variants. A menu will slide in from the right side of the window for further configuration.

There are two primary types of tests available: manual and automated. These options are available for all launch types. Keep in mind that while A/B/n testing is enabled, it will run for every instance of the campaign, including any recurring type of campaign. This applies to both manual and automated tests.

Manual Test

Manual tests are typically used to run a longer-term test across multiple campaigns, instances of a recurring campaign, and/or multiple audiences. This is useful when you want to keep control over which variant is ultimately chosen as the winner.

The platform does not select a winner in a manual test. To choose the winner yourself, duplicate the campaign used for testing and remove the variant(s) you consider non-winners.

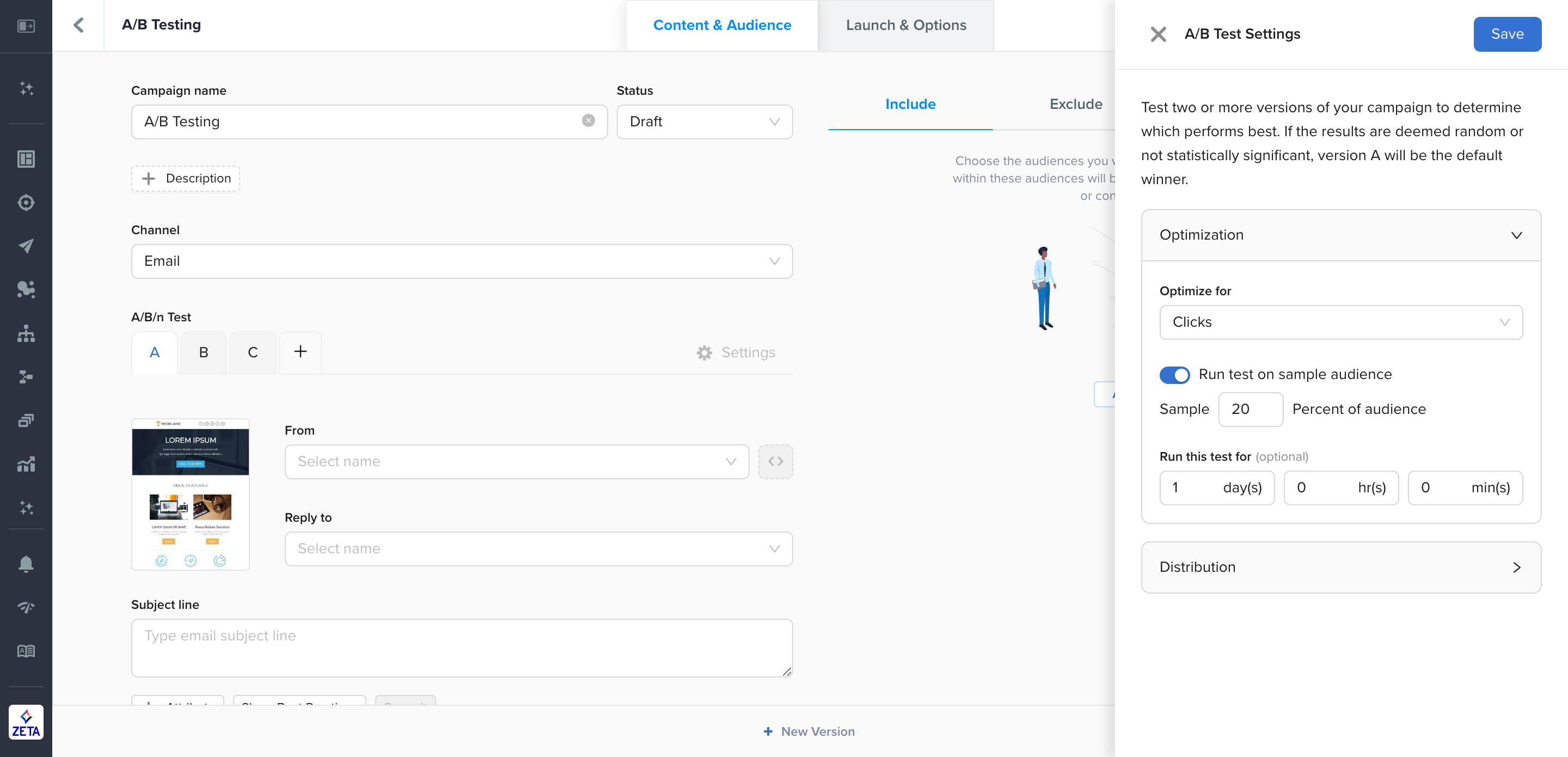

Select the appropriate option to optimize for from the first dropdown menu. Clicks is selected by default, and Opens has no effect on the test. If your account has Conversions and Revenue configured, they are also available for selection.

Leave the Run test on sample audience option deselected. This is the setting that determines whether the test remains manual or automated.

Set the appropriate distribution for the test. The distribution should add up to 100%.
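As a rough illustration of the 100% rule, the sketch below checks a manual-test distribution and turns it into per-variant audience counts. The function name `allocate` and the rounding rule (any leftover recipients go to the first variants) are assumptions made for this example; the article does not describe how the platform rounds.

```python
def allocate(audience_size, distribution):
    """Split an audience across variants using whole-number percentages.

    `distribution` maps variant letters to percentages that must total 100.
    """
    if any(percent < 0 for percent in distribution.values()):
        raise ValueError("percentages cannot be negative")
    if sum(distribution.values()) != 100:
        raise ValueError("distribution must add up to 100%")

    # Round each share down, then hand out what is left one recipient at a time.
    counts = {letter: audience_size * percent // 100
              for letter, percent in distribution.items()}
    leftover = audience_size - sum(counts.values())
    for letter in list(counts)[:leftover]:  # assumed tie-break: first variants
        counts[letter] += 1
    return counts


even_split = allocate(1000, {"A": 50, "B": 30, "C": 20})
small_audience = allocate(10, {"A": 34, "B": 33, "C": 33})
```

A distribution such as 50/30/30 raises a `ValueError` here, which mirrors the requirement that the percentages total exactly 100%.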

Automated Test

Broadcast Campaigns

Automated tests are used to optimize the performance of a subject line or content for any given instance of a campaign. For file-based, API-based, or other recurring broadcasts, this means the test will run on a sample audience and choose a winner every time the campaign is deployed. Typically in these scenarios, a manual test is preferred instead of an automated test.

For ad-hoc broadcast campaigns, automated tests can help decide the best subject line or content for a particular instance: the test runs on a sample audience, and the winner is then automatically sent to the remainder of that audience. The winner is chosen using null-hypothesis significance testing.

Select the appropriate option to optimize for from the first dropdown menu. Clicks is selected by default, and Opens is used for testing subject lines. The Opens option also sets the criterion the platform uses to determine the winner.

Set the amount of time the automated test should run on the sample audience before determining a winner and deploying it to the remainder of the audience.

Set the distribution according to how the test should use the sample audience. This is the percentage of people on whom the test is performed before a winner is selected. The largest sample audience available is 50% of the total audience.
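To make the sample-versus-remainder idea concrete, here is a minimal sketch. It assumes that each variant is given its own percentage of the total audience and that those percentages together form the sample; that interpretation, the function name `plan_automated_test`, and the rounding down are assumptions for illustration only. The 50% cap comes from the article.

```python
MAX_SAMPLE_PERCENT = 50  # largest sample audience stated in the article


def plan_automated_test(audience_size, variant_percents):
    """Return (per-variant sample counts, remainder that receives the winner).

    `variant_percents` maps variant letters to whole-number percentages of the
    total audience (assumed interpretation of the distribution setting).
    """
    sample_percent = sum(variant_percents.values())
    if not 0 < sample_percent <= MAX_SAMPLE_PERCENT:
        raise ValueError("sample audience must be between 0% and 50%")

    sample = {letter: audience_size * percent // 100
              for letter, percent in variant_percents.items()}
    remainder = audience_size - sum(sample.values())
    return sample, remainder


sample, remainder = plan_automated_test(100_000, {"A": 10, "B": 10})
```

With these numbers, 20,000 people take part in the test and the remaining 80,000 would receive whichever variant wins.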

The default Optimization time on the account level cannot be changed since it is standard across all accounts.

Triggered Campaigns

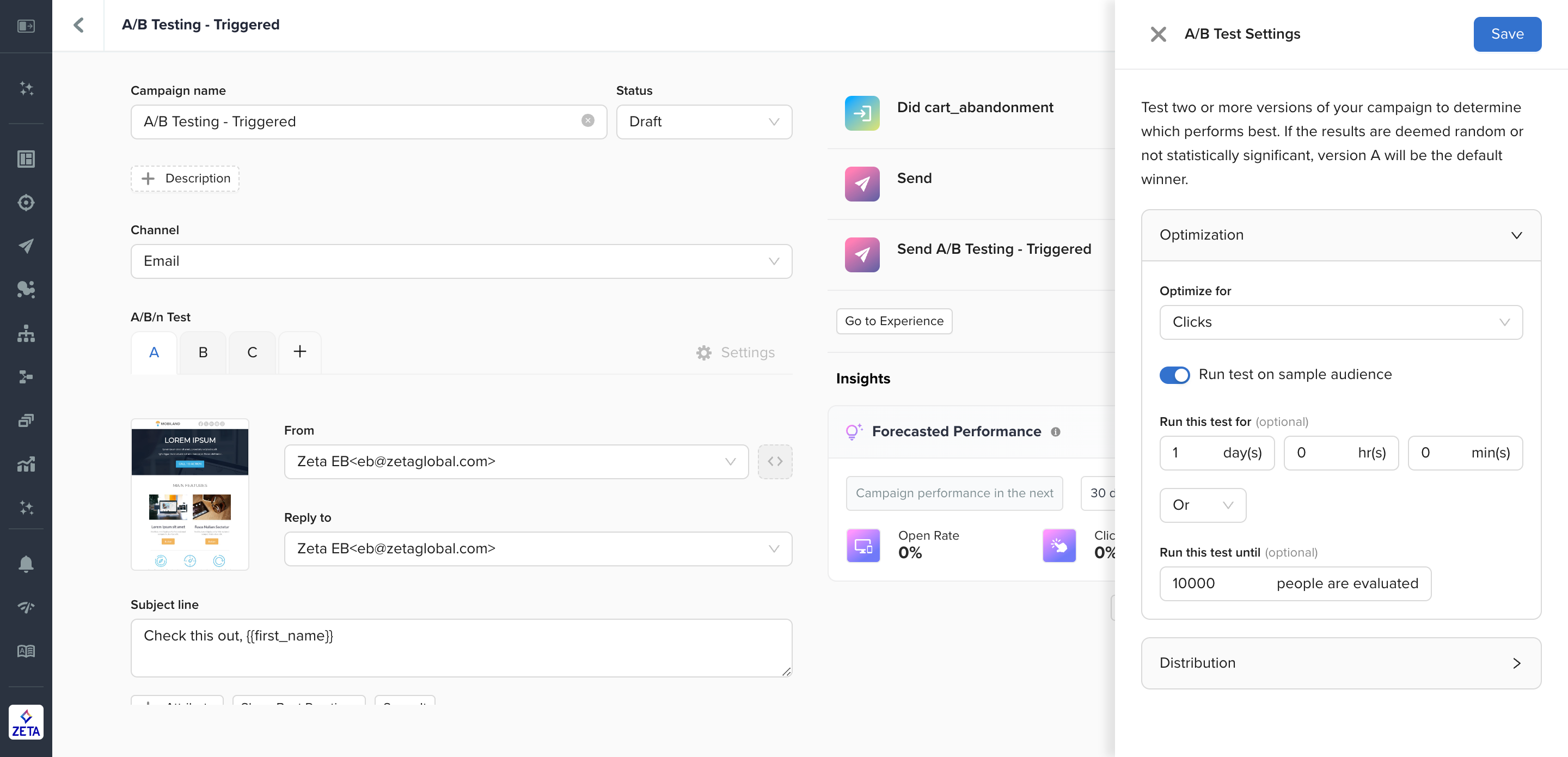

The nature of Triggered campaigns in Experience Builder means that we cannot hold out a portion of the audience, since campaigns are time-sensitive and there are event dependencies downstream. Instead, you can configure a sample audience by specifying the minimum amount of time and/or the minimum number of people evaluated before a winner is chosen. All users who pass through the node after the test is completed will be sent the winning variant only.

Minimum time: The minimum length of the test. The clock does not start until the first person passes through the node.

Minimum people: The minimum number of people required to receive the campaign.

If you only want to use one of these settings, you can clear the other. You can also choose to change the operator to AND so that both minimum time and minimum people must be satisfied before a winner is selected.
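The way the two thresholds combine can be sketched as a small predicate. This is an illustration rather than the platform's logic: the function name `is_test_complete` is hypothetical, and the default operator is assumed to be OR because the article describes AND as something you switch to.

```python
from datetime import timedelta


def is_test_complete(elapsed, people, min_time=None, min_people=None, operator="OR"):
    """Decide whether a triggered test has collected enough data.

    Pass None for a threshold that has been cleared. `operator` is "OR"
    (assumed default) or "AND".
    """
    if operator not in ("OR", "AND"):
        raise ValueError("operator must be 'OR' or 'AND'")

    checks = []
    if min_time is not None:
        checks.append(elapsed >= min_time)
    if min_people is not None:
        checks.append(people >= min_people)
    if not checks:
        raise ValueError("at least one threshold must be set")

    return all(checks) if operator == "AND" else any(checks)


# Two hours in, 500 people so far; thresholds are one hour and 1,000 people.
done_with_or = is_test_complete(timedelta(hours=2), 500,
                                min_time=timedelta(hours=1), min_people=1000)
done_with_and = is_test_complete(timedelta(hours=2), 500,
                                 min_time=timedelta(hours=1), min_people=1000,
                                 operator="AND")
```

In this example the time threshold has been met but the people threshold has not, so the test would end under OR and keep running under AND.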

To help ensure statistical significance, configure at least 1,000 people per variant. If no winner is determined, the A variant will be sent by default.

Once an automated test begins, you cannot make any changes to the test until it’s over. To cancel a test, deactivate the experience and replace the campaign.

If you deactivate an experience with an active test, the test clock stops and then resumes from the same point when the experience is reactivated.

Validating Automated A/B/n Tests for Statistical Significance

This is a deeply complicated topic, but validating your results doesn't have to be.

In the ZMP, we start with a null hypothesis. This means we start with the assumption that the results from A and B are not different and that the observed differences are due to randomness. This is what we hope to reject. If we can't reject it, ZMP will default to A as the winner.

ZMP uses a fixed significance level of 0.05. This is an industry-standard level of risk we’re willing to take of detecting a false positive. In other words, when there is no real difference between the variants, about 5% of tests will still detect a winner that is actually due to randomness and/or natural variation.
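For readers who prefer to check results in code, the sketch below applies a two-sided two-proportion z-test at the 0.05 level. The choice of this particular test is an assumption: the article only states that a null hypothesis and a 0.05 significance level are used, so this may not match the exact calculation ZMP performs. The function names and the sample figures are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist

ALPHA = 0.05  # significance level stated in the article


def two_proportion_p_value(successes_a, total_a, successes_b, total_b):
    """Two-sided p-value for the null hypothesis that A and B have equal rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_error == 0:
        return 1.0  # no variation at all, so nothing to distinguish
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(successes_a, total_a, successes_b, total_b, alpha=ALPHA):
    """True when the null hypothesis can be rejected at the given level."""
    return two_proportion_p_value(successes_a, total_a, successes_b, total_b) < alpha


# Hypothetical figures: unique opens out of delivered messages for A and B.
clear_difference = is_significant(200, 1000, 260, 1000)
small_difference = is_significant(200, 1000, 205, 1000)
```

Here the first comparison (20% versus 26% on 1,000 deliveries each) rejects the null hypothesis, while the second (20% versus 20.5%) does not, which corresponds to the case where A would be kept as the default.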

From here, there are many calculators online that can help you determine whether or not your test results are statistically significant.

Here is a basic Excel spreadsheet with the formulas already in place. Simply enter your delivered and unique open rate for A and B to see a simple Yes or No output: